![[LWN Logo]](http://old.lwn.net/images/lcorner.png)

![[LWN.net]](http://old.lwn.net/images/backpage.png)



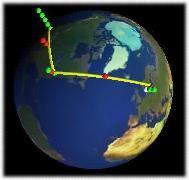

Linux Links of the Week

An Atlas of Cyberspaces is a great resource for those interested in how the net is put together. It's full of Internet maps, graphical route tracers (like xtraceroute, shown here), and many, many other goodies.


Haven't had enough licensing talk? SourceForge has set up a forum dedicated to the discussion of software licenses.

Section Editor: Jon Corbet

July 13, 2000


This week in history

Two years ago (July 16, 1998 LWN), the KDE/GNOME flamewars may well have hit their peak. It was no fun.

> Because it is 100% Open Source, because it is technically quite good, and because of the wisdom of its development team, GNOME will become the standard GUI for Linux. A large portion of the free software community will simply not accept KDE because of the Qt license.

The screaming notwithstanding, KDE 1.0 was released this week.

The development kernel release was still 2.1.108. Much discussion occurred on troubles with the 2.1 memory management subsystem - a conversation which continues, with many of the same participants, to this day. The 2.0.35 stable kernel was released this week.

The Debian 2.0 release was in its third beta, with only 39 release critical bugs left to be fixed.

Transvirtual released Kaffe 1.0. And Netscape was proclaiming the success of the Mozilla project, with a Communicator 5.0 release expected by the end of the year.

One year ago (July 15, 1999 LWN) was a relatively slow time in the Linux world.

The development kernel was at 2.3.10. The allegedly stable kernel was 2.2.10, but the kernel hackers were working hard to be sure that a file corruption bug was truly stamped out before releasing 2.2.11.

The Debian project, meanwhile, pondered freezing the 2.2 "potato" version, with talk of a possible release in September (of 1999!).

A slightly different sort of endorsement came in this week:

> Once I explain what Linux is, I am certain you will understand why it is important for the Christian community of computer users to embrace it. More Internet sites use Linux on their servers than any other OS. Linux is revolutionizing the information technology (IT) universe just like the early Church changed the Roman Empire in the first century AD.


Letters to the editor

Letters to the editor should be sent to letters@lwn.net. Preference will be given to letters which are short, to the point, and well written. If you want your email address "anti-spammed" in some way please be sure to let us know. We do not have a policy against anonymous letters, but we will be reluctant to include them.

Date: Thu, 6 Jul 2000 18:39:30 -0400
From: "Jay R. Ashworth" <jra@baylink.com>
To: letters@lwn.net
CC: torvalds@transmeta.com, risks@csl.sri.com
Subject: Aw, shit.

I've had to disagree with some impressive people in my time, but never with Linus Himself. :-)

Last week's Linux Weekly News quotes Linus, from the Kernel mailing list <http://lwn.net/2000/0706/a/latencylinus.php3>, concerning a debate on Linux kernel latency (and realtime extensions):

> Well, I personally would rather see that nobody ever needed RTlinux at
> all. I think hard realtime is a waste of time, myself, and only to be used
> for the case where the CPU speed is not overwhelmingly fast enough (and
> these days, for most problems the CPU _is_ so overwhelmingly "fast enough"
> that hard realtime should be a non-issue).

If you want to assume that raw processor speed is enough to make (hard) real-time a "non-issue", you have to be willing to bet -- your life, because that's part of what hard real-time systems are all about -- that there is not *one line of code in your entire system* that can hang against real time running, keeping the machine from responding within the prescribed latency to an external stimulus.

Nothing in the user apps. Nothing in the kernel. Nothing in the device drivers.

That is a *LOT* of code to verify. Given the degree of complexity of today's instruction sets, I'm tempted to say it's not possible to do it. It's certainly a lot easier if all you have to validate is the Hard-RT kernel and the code you want to run.

Yes, for soft-realtime, this approach ought to work nicely. But the hard-RT guys are solving a problem that differs not merely in degree, but in *kind*, even though it may appear to be merely a more stringent application.

When that limit sensor on the robot arm that's about to pin you against the wall trips, you do *not* want a hard drive spin-up (or someone's bad code) to get you killed.

(And yes, I realize that if you are working on life-safety type systems, you need to be evaluating every line of your code anyway; my point is that, if you can verify that the hard-RT kernel is the only part that needs to be validated, then that's all you need to check to that level of thoroughness -- and that's a *much* shorter code path, no?)

Cheers,
-- jra

--
Jay R. Ashworth, jra@baylink.com
Member of the Technical Staff
The Suncoast Freenet, Tampa Bay, Florida
http://baylink.pitas.com
+1 727 804 5015

Date: Thu, 6 Jul 2000 17:22:39 -0700 (PDT)
From: Linus Torvalds <torvalds@transmeta.com>
To: "Jay R. Ashworth" <jra@baylink.com>
Subject: Re: Aw, shit.

On Thu, 6 Jul 2000, Jay R. Ashworth wrote:

> > [ Me quoted on not being all that excited about hard-realtime ]
>
> If you want to assume that raw processor speed is enough to make
> (hard) real-time a "non-issue", you have to be willing to bet -- your
> life, because that's part of what hard real-time systems are all about
> -- that there is not *one line of code in your entire system* that can
> hang against real time running, keeping the machine from responding
> within the prescribed latency to an external stimulus.
>
> Nothing in the user apps. Nothing in the kernel. Nothing in the
> device drivers.

Hey. I write OS's for a living. If my _life_ depended on something specific having 5usec latencies, I'd prefer not to have a hard-RT OS under it at all.

There are always bugs, and branding something "hard realtime" does not make those bugs go away. Look at QNX on Mars. Yes, it worked in the end, but that was a bug _due_ to trying to be real-time. It happens. Priority inversion. Programmer error. TLB and cache worst-case scenarios that nobody thought about and never got caught in testing.

If you're _that_ latency-critical, I would suggest special hardware to do the latency-critical stuff, and running a specialized app on that hardware. I'd sure prefer not to use standard PC parts, thank you very much.

Use a real OS on "regular" hardware for the non-critical stuff, like the pretty pictures to control and show what's going on.

> Yes, for soft-realtime, this approach ought to work nicely. But the
> hard-RT guys are solving a problem that differs not merely in
> degree, but in *kind*, even though it may appear to be merely a more
> stringent application.

"My problems are so special that I can only run my own code". Sure.

But if that's _really_ the case you'd better run it in memory that the "untrusted" OS cannot even touch. And on hardware that the untrusted OS has a hard time corrupting.

Either it is critical or it isn't. If it's critical, you don't stop at the OS level, you go all the way. If it isn't, then you're a _lot_ better off usually just getting standard components and just stacking the hardware in your favour (ie "too much memory, too fast CPU, too fast disk, and to hell with hard real-time").

In short, I don't think there are all that many applications where "hard realtime" makes sense in a general-purpose OS. And it sure as hell should not be an interface that somebody doing streaming video and audio should _ever_ touch.

Linus

Date: Fri, 07 Jul 2000 00:55:24 +0800
From: Leon Brooks <leon@brooks.smileys.net>
To: editor@lwn.net
Subject: Defacing websites

Last week's LWN covered an article which osOpinion was kind enough to publish for me (http://www.osopinion.com/Opinions/LeonBrooks/LeonBrooks5.html).

Most of the feedback has been extremely positive, but I had one chap go off at me for advocating the defacement of websites. I do indeed advocate the defacement of a website in the article, but hasten to point out that it is at the invitation of the site's owner.

The principle can be illustrated by a story about Tetzel. This enterprising lad, a member of the Papal Court, was out and about hawking indulgences for the benefit of the Holy Roman Spiritual Empire. An indulgence was (is) a document purporting to remit a particular sin or class of sin, past present _or_future_. "Father, forgive me, for I am about to sin?" "...for I am sinning?"

Hmmm... very Microsoft... anyway, a gentleman bought an indulgence from Tetzel permitting him to indulge in armed robbery. As Tetzel subsequently left town with his (copious) ill-gotten gains, lo, who should step from the bushes armed and dangerous but our recently accredited highwayman - who promptly absconded with said ill-gotten gains.

Tetzel then had the man arrested and brought to trial, at which trial the indulgence was produced. The judge asked Tetzel to verify that his signature and hence the indulgence behind it was valid. What was a man to do? There would have been dozens of spectators present who had purchased their own indulgences. Tetzel said yes, the judge said [klonk] case dismissed.

Following the same principle, and bearing in mind his record, I advocate breaking John Tetzel's, sorry Taschek's, hack-me site Windows system(s) if you're sure you can. After all, he asked you to. Just be careful to make the fact public and obvious. And if you can't surely break them, avoid his site and get on with something useful.

--
Linux will not get in the door by simply mentioning it... it must win by proving itself superior. We have no marketing department, our sales department is an FTP server in North Carolina and our programming department spans 7 continents. Am I getting through? -- Signal11 (/.)
